The promise of generative AI and ChatGPT for L&D


Unless you’ve been hiding under a rock for the past few months, chances are you’ve caught one or two (or seventeen) conversations about artificial intelligence (AI) and ChatGPT. If you’re attending Learning Technologies at ExCeL London, then you’re sure to catch a few more on the topic.

AI and machine learning (ML) techniques have been quietly at work in many pieces of technology for decades. But this explosion of public interest marks a potential turning point for society, as more and more people begin to grapple with the growing reality, and approaching ubiquity, of AI.

Learning and development (L&D) is no exception. So let’s look at the macro trends at play, how AI will affect the industry, AI’s growing role in improving L&D operations, and its risks and limits.

How AI will reshape online learning

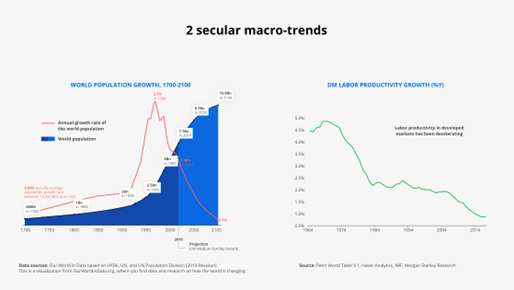

There are two key macro trends that L&D professionals need to keep an eye on (whether they’re interested in AI or not):

- The employable share of the population is shrinking

- Overall productivity in the workforce is declining

Macro trends for L&D professionals to watch

Consequently, employee retention and talent acquisition strategies are more important than ever. Keeping people around longer, increasing their productivity, and attracting top talent have always been key, but when you take these macro trends into account, the stakes are even higher.

L&D teams are responsible for improving employee retention and productivity, as well as talent acquisition. That’s not new. What is new is how AI can help achieve these goals. L&D teams can use AI and machine learning tools to improve operations in the following ways:

- Automation of tedious tasks, freeing up administrative time and improving accuracy.

- Hyper-personalisation of L&D material, matching people with what they need faster and serving multiple distinct audiences seamlessly.

- Content generation that helps reduce overhead and improve accessibility.

- Conversational business intelligence, providing quicker and better access to insights.

Examples of AI’s increasing role in improving L&D operations

AUTOMATION

Imagine doing the same thing over and over and over. Without a break. All. Day. Long. It might seem boring to us, but it’s exactly what computers were designed to do. The first major commercial uses of AI and ML were generally in automation, and automation will likely remain the foundation for adoption for years to come.

In L&D specifically, automation via AI might look like:

- Auto-tagging. Classifying 12 pieces of content is no problem for a human. But what about 12,000? What if the classification parameters need updating on a regular basis? This is a job for an algorithm.

- Transcribing. Video-based learning is huge, and with the TikTok generation on the rise, we can only expect the amount of video to increase. But manual transcription takes time. Modern AI can perform video-to-text transcriptions with impressive accuracy and speed.

- Translating. Google Translate works in a pinch, but if you need to convert a full course into multiple languages, you’ll need more firepower. AI can create very good first drafts for native speakers to review, saving a lot of time and money.

- Search indexing. Making things easy to find is no small feat when dealing with data and content. Social learning can be especially messy, since much of its value is created organically with minimal structure. AI and ML tools can work in the background to create order out of chaos, reducing the time it takes a human (or another system) to find information.
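To make auto-tagging a little more concrete, here is a minimal Python sketch. It swaps in simple keyword rules where a production system would more likely use a trained ML classifier, and the tag names and keywords in `TAG_RULES` are hypothetical. What it illustrates is the point made above: once the classification parameters live in data, tagging 12 items or 12,000 is the same job, and updating the rules means re-running a function rather than re-tagging by hand.

```python
from collections import Counter

# Hypothetical tag rules, for illustration only. Keeping the classification
# parameters in data means updating them is a config change, not a manual
# re-tagging exercise.
TAG_RULES: dict[str, set[str]] = {
    "security": {"phishing", "password", "malware", "gdpr"},
    "sales": {"pipeline", "prospect", "quota", "negotiation"},
    "leadership": {"feedback", "coaching", "delegation", "team"},
}


def auto_tag(text: str, rules: dict[str, set[str]] = TAG_RULES, min_hits: int = 1) -> list[str]:
    """Return every tag whose keywords appear at least `min_hits` times in `text`."""
    # Normalise words: strip common punctuation and lowercase them.
    words = Counter(w.strip(".,!?;:()").lower() for w in text.split())
    return sorted(
        tag for tag, keywords in rules.items()
        if sum(words[k] for k in keywords) >= min_hits
    )


def tag_library(library: dict[str, str], rules: dict[str, set[str]] = TAG_RULES) -> dict[str, list[str]]:
    """Tag every item in a content library: 12 items or 12,000, same code."""
    return {item_id: auto_tag(text, rules) for item_id, text in library.items()}


library = {
    "c1": "Spotting phishing emails and choosing a strong password",
    "c2": "Giving feedback to your team",
}
print(tag_library(library))  # {'c1': ['security'], 'c2': ['leadership']}
```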

Automation is more than simply time-saving. It is an optimiser of human attention and skill. Most people don’t enjoy mundane, repetitive tasks (and we can get tired and make mistakes). Offloading the digital grunt work to machines frees people up to tackle more creative and strategic challenges.

HYPER-PERSONALISATION

One-size-fits-all solutions aren’t created because they’re what people want. They’re created because they scale. In L&D, this looks like one instructor trying to manage 35 students. Or 3,500 employees completing the exact same security training.

Most people would benefit from 1:1 coaching and mentoring versus the ‘crowded classroom’ approach. But that’s expensive. Often prohibitively so.

AI and ML can ease the scaling problem through hyper-personalisation. They can’t match 1:1 coaching (yet), but they can filter out much of the superfluous and irrelevant content a learner doesn’t want or need, and they can adapt to user behaviour, adjusting dynamically in response.

Here’s an example of how this could play out in L&D:

A UX/UI developer is navigating her company’s L&D system. Based on the completion rates of previous courses, her title, the activities of her peers, and her own self-defined goals, the AI recommends a custom-built curriculum. As she moves through these courses, the AI detects a bias towards a particular programming language (based on signals such as time spent on articles and assessment results).

Depending on the overall L&D strategy, the AI could reinforce the bias (showing her more courses that use this language) or counter it. Or it might do nothing about the bias and simply report it as an observation.
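The UX/UI developer scenario can be sketched as a small recommender. This is an illustrative assumption, not how Docebo or any other platform actually works. Courses are scored against a subset of the signals mentioned (her self-defined goals, and what peers with the same title study), the scoring weights and the 60% bias threshold are made up, and the three `policy` values mirror the options above: reinforce the bias, counter it, or simply report it.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Course:
    name: str
    topics: frozenset[str]
    language: Optional[str] = None  # programming language the course uses, if any


@dataclass
class Learner:
    goals: set[str]       # self-defined goals
    peer_topics: Counter  # topics studied by peers with the same job title
    minutes_by_language: Counter = field(default_factory=Counter)  # time on articles


def score(course: Course, learner: Learner) -> float:
    # Hypothetical weights: matching a stated goal counts far more than peer activity.
    goal_match = len(course.topics & learner.goals)
    peer_match = sum(learner.peer_topics[t] for t in course.topics)
    return 2.0 * goal_match + 0.1 * peer_match


def detect_bias(learner: Learner, threshold: float = 0.6) -> Optional[str]:
    """Return a language that takes up more than `threshold` of study time, if any."""
    total = sum(learner.minutes_by_language.values())
    if total == 0:
        return None
    lang, minutes = learner.minutes_by_language.most_common(1)[0]
    return lang if minutes / total > threshold else None


def recommend(courses: list[Course], learner: Learner, policy: str = "report", k: int = 3):
    """Rank courses by score, then apply one of the three bias policies."""
    if policy not in {"reinforce", "counter", "report"}:
        raise ValueError(f"unknown policy: {policy}")
    ranked = sorted(courses, key=lambda c: score(c, learner), reverse=True)
    bias = detect_bias(learner)
    notes = []
    if bias is not None:
        # list.sort is stable, so courses keep their score order within each group.
        if policy == "reinforce":
            ranked.sort(key=lambda c: c.language != bias)  # biased language first
        elif policy == "counter":
            ranked.sort(key=lambda c: c.language == bias)  # biased language last
        notes.append(f"observed bias towards {bias}")  # always reported
    return [c.name for c in ranked[:k]], notes


courses = [
    Course("Intro to Python", frozenset({"programming"}), "python"),
    Course("Advanced TypeScript", frozenset({"programming", "frontend"}), "typescript"),
    Course("Accessible UI design", frozenset({"ux", "frontend"})),
    Course("Security basics", frozenset({"security"})),
]
learner = Learner(
    goals={"frontend", "ux"},
    peer_topics=Counter({"ux": 10, "programming": 5}),
    minutes_by_language=Counter({"typescript": 90, "python": 10}),
)
print(recommend(courses, learner, policy="counter"))
# (['Accessible UI design', 'Intro to Python', 'Security basics'], ['observed bias towards typescript'])
```

Keeping the policy as a parameter reflects the point in the text: whether to reinforce or counter a detected bias is a strategy decision, not something the algorithm should settle on its own.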

CONTENT GENERATION

This topic has been getting a lot of attention lately. Tell an art AI like Midjourney to draw a picture of a cat in the style of Picasso or ask ChatGPT to write you a sonnet about your dog in the style of Shakespeare and you’ll get what you ask for. As entertaining as this may be, L&D content developers would do well to understand how they might leverage these tools to enhance (not replace) their output.

Here’s how generative AI tools like ChatGPT and Shape (Docebo’s AI-based content creation tool) could simplify content creation:

If you were to prompt ChatGPT to write a short article on ‘the most important things a new salesperson needs to know,’ it would generate one in seconds. After fact-checking the AI output, tweaking the content, and polishing the language, you could feed the content into Shape. By now, you’d realise it’s time for a tea, so you’d go and make one. By the time you got back to your desk, Shape would have produced a deck complete with visuals. After a few minutes of reviewing and refining the deck, you could have Shape create an audio voiceover (for accessibility) and translate the deck into multiple languages.

From a single prompt, you now have several assets in multiple languages with audio voiceover files to go with them. If you did this job manually, it would take days (or longer, in many cases). But with AI, it takes minutes. And the best part is, aside from the initial prompt and a few quick reviews, AI does the bulk of the work.

If you think this sounds too good to be true, then come by Bitesize Learning Zone 1 on 4 May @ 14.40 to catch a real-time demo of this exact scenario.

CONVERSATIONAL BUSINESS INTELLIGENCE

Reporting, dashboards, analysis… these are the staples of business intelligence (BI) and underpin many major decisions.

Conversational BI means using natural-language prompts to an AI tool to crunch numbers and surface visual data easily, cutting down the time spent requesting and waiting for reports. An L&D administrator could use conversational BI in the following way:

They could ask an AI tool to show them course completions for last month in all major categories except security training, and it would produce a table. The administrator could turn the table into a bar graph or pie chart with a single click, or even ask the AI tool to analyse the data further and compare it with last year’s results.

Conversational business intelligence in action

(Of course, for all of this to work, the underlying database needs to be well maintained and accurate. Yet another job for AI!)
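Behind the conversational layer, the administrator’s question still has to become an ordinary query. The sketch below skips the natural-language step entirely (that is the part the AI tool handles) and shows, assuming clean and hypothetical completion records, the kind of filtering and aggregation that question maps to. The function names and record layout are illustrative, not any product’s API.

```python
from collections import Counter
from datetime import date


def previous_month(today: date) -> tuple[int, int]:
    """Return (year, month) for the calendar month before `today`."""
    return (today.year - 1, 12) if today.month == 1 else (today.year, today.month - 1)


def completions_by_category(records, year, month, exclude=frozenset()):
    """Count completions per category in one month, skipping excluded categories."""
    counts = Counter(
        category
        for category, completed_on in records
        if completed_on.year == year and completed_on.month == month
        and category not in exclude
    )
    return dict(sorted(counts.items()))


def compare_year_on_year(records, year, month, exclude=frozenset()):
    """Return {category: (this_year, last_year, change)} for the same month."""
    now = completions_by_category(records, year, month, exclude)
    before = completions_by_category(records, year - 1, month, exclude)
    return {
        cat: (now.get(cat, 0), before.get(cat, 0), now.get(cat, 0) - before.get(cat, 0))
        for cat in sorted(set(now) | set(before))
    }


# Hypothetical completion records: (category, completion date).
records = [
    ("sales", date(2023, 4, 3)),
    ("sales", date(2023, 4, 20)),
    ("security", date(2023, 4, 11)),
    ("leadership", date(2023, 4, 28)),
    ("sales", date(2022, 4, 14)),
    ("leadership", date(2022, 4, 2)),
    ("leadership", date(2022, 4, 9)),
]

# "Show me course completions for last month in all major categories except security."
year, month = previous_month(date(2023, 5, 4))
print(completions_by_category(records, year, month, exclude={"security"}))
# {'leadership': 1, 'sales': 2}
print(compare_year_on_year(records, year, month, exclude={"security"}))
# {'leadership': (1, 2, -1), 'sales': (2, 1, 1)}
```

Note that the output is only as good as `records`: a mislabelled category or a missing completion date flows straight into the table, which is why the underlying data matters so much.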

The risks of AI for L&D

For all its promise and convenience, AI isn’t without its risks. Here are a few things to consider:

- AI is not conscious and has no sense of ethics or morality. It simply does what its programming and training data dictate, and both are produced by fallible humans. Blindly trusting AI outputs is a mistake: there should always be a ‘human-in-the-loop’ to scrutinise them.

- AI can amplify biases, prejudices, and other inequalities. We already see this happening on social media platforms. If an algorithm is designed to learn what content ‘engages’ you, and it doesn’t differentiate between ‘happy engagement’ and ‘angry engagement’, then it may ‘optimise’ towards filling your feed with content that angers you, with no regard for whether that content is true or good for you.

- It’s a disruptive and relatively new technology, which always carries risks: we don’t yet know exactly how it will evolve or how it might be regulated. Unintended consequences are the norm with new tech, so a healthy dose of risk management should accompany any AI/ML strategy.
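The engagement trap described in the risks above can be illustrated with a toy feedback loop. It is a deliberately simplified model, not any platform’s real ranking algorithm: each round, the feed is reweighted by how much engagement each kind of content earned, and the engagement rates are invented. Even a small edge for angering content compounds until it dominates the feed.

```python
from typing import Optional


def simulate_feed(
    engagement_rate: dict[str, float],
    rounds: int,
    weight: Optional[dict[str, float]] = None,
) -> dict[str, float]:
    """Toy feedback loop: each round, reweight the feed by the engagement it earned.

    `weight` lets the metric treat kinds of engagement differently. By default
    every engagement counts the same, which is the blind spot described above.
    """
    weight = weight or {kind: 1.0 for kind in engagement_rate}
    # Start with an even split across content kinds.
    share = {kind: 1 / len(engagement_rate) for kind in engagement_rate}
    for _ in range(rounds):
        scored = {k: share[k] * engagement_rate[k] * weight[k] for k in share}
        total = sum(scored.values())
        share = {k: v / total for k, v in scored.items()}  # renormalise to 100%
    return share


# Hypothetical rates: angering posts get engaged with slightly more often.
rates = {"happy": 0.2, "angry": 0.3}
print(round(simulate_feed(rates, rounds=10)["angry"], 3))  # 0.983
print(round(simulate_feed(rates, rounds=10, weight={"happy": 1.0, "angry": 0.5})["angry"], 3))  # 0.053
```

Passing a `weight` that discounts angry engagement (the second call) reverses the drift. That is the ‘differentiating’ step the unchecked algorithm is missing.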

WATCH the webinar recording ‘Generative AI and ChatGPT: Reshaping Online Learning’ with Claudio Erba (CEO and Founder, Docebo) along with Fabio Pirovano (CPO and Co-Founder, Docebo) and Massimo Chiriatti (CTO/CIO, Lenovo).

VISIT Docebo at Learning Technologies, ExCeL London in Theatre 1 on 3 May at 10.15 and 4 May at 12.30 (or come by stand F10) to get a unique take on how emerging AI technology will play a key role in L&D, and how you can harness the power of AI to work smarter (not harder).

Mike Byrne

Vice President Sales, EMEA, Docebo
